Chinese and American research teams have announced advances in artificial intelligence computing, each promising dramatic gains in speed and efficiency over existing systems. The dual unveilings mark a new front in the global contest for AI supremacy: China has debuted a brain-inspired AI model that runs without U.S. hardware, while American scientists are pushing the boundaries of light-powered AI computing (South China Morning Post).

The Chinese Academy of Sciences introduced SpikingBrain1.0, billed as the world’s first “brain-like” language model, which is said to run 25 to 100 times faster than standard systems by selectively activating computational pathways. Meanwhile, Microsoft researchers and a University of Florida team separately unveiled an analog optical computer and a photonic chip, both of which use the properties of light to accelerate AI tasks with potentially large energy savings.

China’s Brain-Inspired AI Sets Speed and Efficiency Milestones

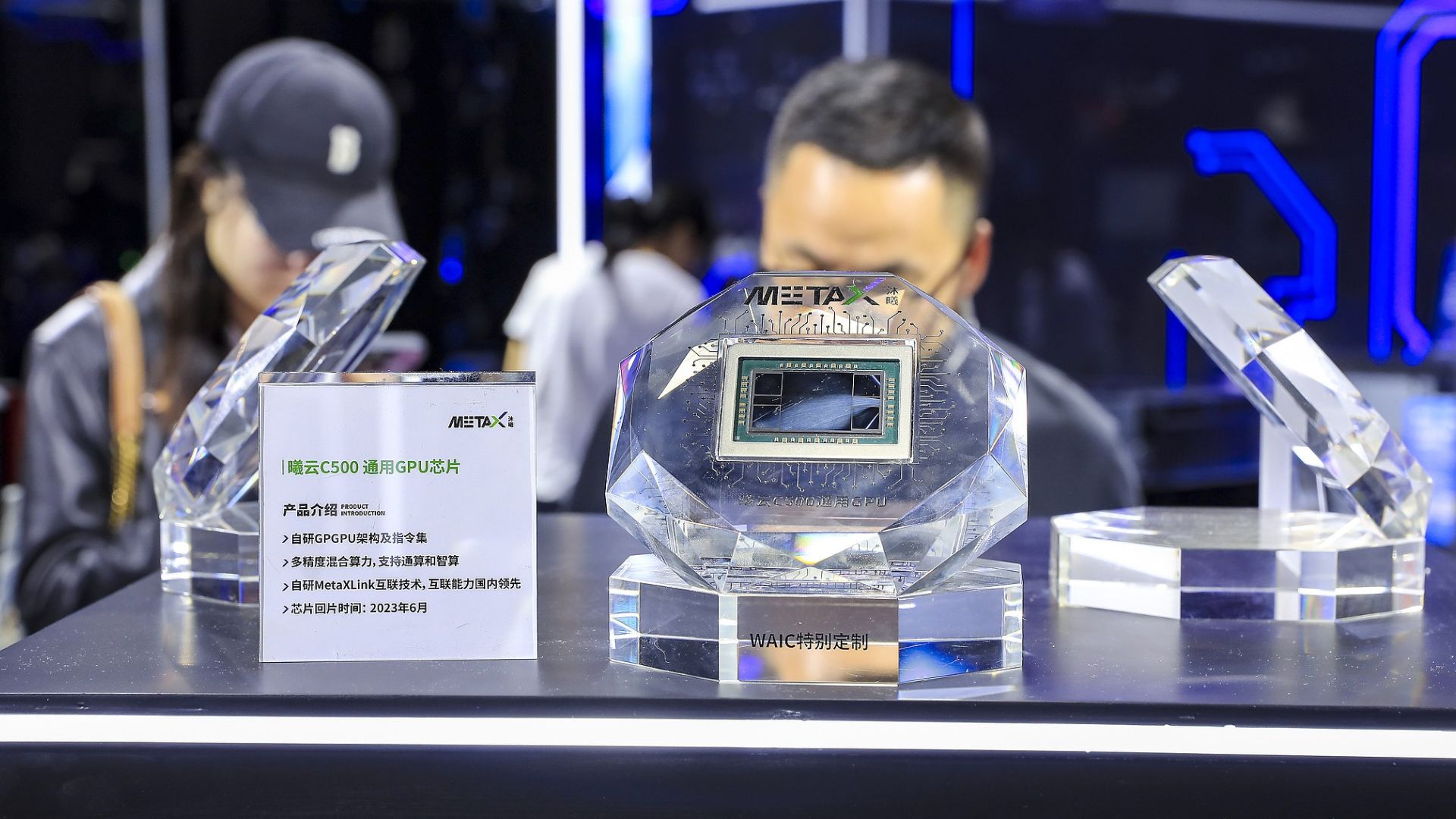

SpikingBrain1.0, unveiled by Chinese scientists, mimics the brain’s neural processing by activating only the neural pathways needed for a given input, unlike traditional neural networks, which engage all layers for every task. According to a technical paper on arXiv, this approach lets the model achieve comparable language understanding with less than two percent of the training data required by conventional models, and up to 26.5 times the speed on ultra-long sequence tasks (arXiv). The system runs on homegrown MetaX chips, highlighting Chinese efforts to achieve technological independence amid ongoing U.S. export controls (MetaX Integrated Circuits).
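To make the idea of selective activation concrete, here is a minimal Python sketch of a leaky integrate-and-fire layer, a textbook spiking-neuron model. It is an illustration only and not SpikingBrain1.0's actual design: the function names `lif_step` and `sparse_forward`, the threshold, and the leak factor are all assumptions chosen for the example.

```python
import numpy as np

def lif_step(membrane, inputs, threshold=1.0, leak=0.9):
    """Advance a layer of leaky integrate-and-fire neurons by one time step.

    Hypothetical illustration; threshold and leak are assumed values.
    """
    # Each neuron keeps a decaying memory of past input and adds the new input.
    membrane = leak * membrane + inputs
    # A neuron "spikes" only when its accumulated potential crosses the threshold.
    spikes = membrane >= threshold
    # Neurons that spiked are reset to zero; the rest keep their potential.
    membrane = np.where(spikes, 0.0, membrane)
    return membrane, spikes

def sparse_forward(weights, spikes):
    """Compute the next layer's input using only the neurons that spiked."""
    active = np.flatnonzero(spikes)
    # Summing the active columns equals weights @ spikes, but skips silent neurons.
    return weights[:, active].sum(axis=1), active.size

if __name__ == "__main__":
    membrane = np.zeros(4)
    inputs = np.array([0.2, 1.5, 0.0, 1.0])
    membrane, spikes = lif_step(membrane, inputs)
    weights = np.arange(8, dtype=float).reshape(2, 4)
    output, n_active = sparse_forward(weights, spikes)
    print(f"{n_active} of {spikes.size} neurons fired; output = {output}")
```

In this toy setup the work done in `sparse_forward` scales with the number of neurons that fired rather than with the size of the layer, which is the general intuition behind the efficiency claims for spiking models.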

Researchers report that SpikingBrain1.0’s hybrid architecture can process million-token contexts dramatically faster than industry-standard Transformer models, potentially enabling new applications for real-time, complex language interpretation and analysis.
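The article does not say how the hybrid architecture achieves this. As general background, one reason very long contexts are costly for standard Transformers is that every token is compared with every earlier token, so the number of comparisons grows quadratically with length. The sketch below counts those comparisons and contrasts them with a fixed-size local window, which is used here purely as an assumed example of a cheaper alternative; the function names and the window size are hypothetical.

```python
def causal_pairs(n_tokens: int) -> int:
    """Token-pair comparisons in standard causal self-attention.

    Token i attends to itself and all i earlier tokens: 1 + 2 + ... + n.
    """
    return n_tokens * (n_tokens + 1) // 2

def windowed_pairs(n_tokens: int, window: int) -> int:
    """Comparisons when each token sees at most `window` recent tokens.

    The fixed window is an assumption for illustration, not a description
    of SpikingBrain1.0.
    """
    w = min(window, n_tokens)
    # The first w tokens see 1, 2, ..., w tokens; every later token sees exactly w.
    return w * (w + 1) // 2 + (n_tokens - w) * w

if __name__ == "__main__":
    n = 1_000_000
    full = causal_pairs(n)
    local = windowed_pairs(n, window=4096)
    print(f"full attention: {full:,} comparisons")
    print(f"windowed:       {local:,} comparisons")
    print(f"ratio:          {full / local:.1f}x")
```

The gap between the two counts widens as the sequence grows, which is why architectures that avoid all-pairs comparison are attractive for million-token inputs.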

U.S. Teams Pioneer Light-Based AI Hardware

Microsoft’s new analog optical computer, detailed in a Nature publication and built from off-the-shelf components, uses micro-LEDs and camera sensors to process data purely with light, circumventing traditional digital-to-analog conversions. The system offers up to 100-fold greater energy efficiency for tasks such as AI inference and financial optimization, and successfully reconstructed high-quality brain images using just 62.5% of original scan data (Cosmico).

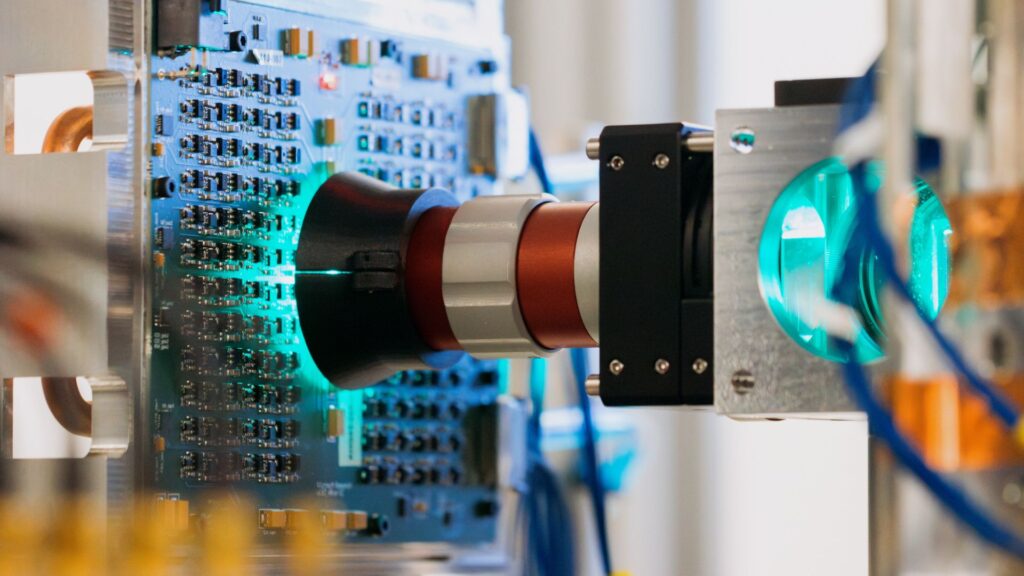

Complementing Microsoft’s advance, University of Florida researchers fabricated a silicon chip with integrated Fresnel lenses capable of handling convolution operations for deep learning using laser light. Their device achieved 98% accuracy in digit classification tests while drawing a fraction of the power required by electronic AI chips, and supports parallel processing of multiple data streams through wavelength multiplexing (University of Florida News).
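The article does not describe the chip's optics in detail. As background on why lenses are useful for this task: in Fourier optics a lens can produce the Fourier transform of an incoming light field, and the convolution theorem says that a convolution becomes a simple pointwise multiplication in the Fourier domain. The NumPy sketch below checks that identity numerically. It is a software analogy under those assumptions, not a model of the University of Florida device, and the function names are made up for the example.

```python
import numpy as np

def fourier_convolve2d(image, kernel):
    """Circular 2-D convolution computed as a pointwise product of spectra."""
    # Zero-pad the kernel to the image size so both spectra have the same shape.
    kernel_padded = np.zeros_like(image, dtype=float)
    kh, kw = kernel.shape
    kernel_padded[:kh, :kw] = kernel
    # Transform, multiply element by element, then transform back.
    spectrum = np.fft.fft2(image) * np.fft.fft2(kernel_padded)
    return np.real(np.fft.ifft2(spectrum))

def direct_circular_convolve2d(image, kernel):
    """Reference result: sum of shifted, weighted copies of the image."""
    out = np.zeros_like(image, dtype=float)
    for dy in range(kernel.shape[0]):
        for dx in range(kernel.shape[1]):
            # np.roll wraps around the edges, matching the FFT's circular behavior.
            out += kernel[dy, dx] * np.roll(image, shift=(dy, dx), axis=(0, 1))
    return out

if __name__ == "__main__":
    rng = np.random.default_rng(0)
    image = rng.random((8, 8))
    kernel = rng.random((3, 3))
    fast = fourier_convolve2d(image, kernel)
    slow = direct_circular_convolve2d(image, kernel)
    print("methods agree:", np.allclose(fast, slow))
```

In an optical system the transform step would be performed by light passing through the lens rather than by arithmetic, which is where the potential energy savings would come from.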

Competing Paths Toward Sustainable AI

As AI systems drive soaring energy demands, with data centers projected to double their power consumption by 2030, both breakthroughs point toward new architectures needed for sustainable scaling. China’s brain-inspired model aims for autonomy and resource efficiency, while U.S. teams explore optical computing’s promise of orders-of-magnitude reductions in energy use.

Industry observers note that, while early in their development, these advances could substantially reshape the future of AI hardware, with potential ripple effects in language processing, scientific research, and large-scale data analytics worldwide.