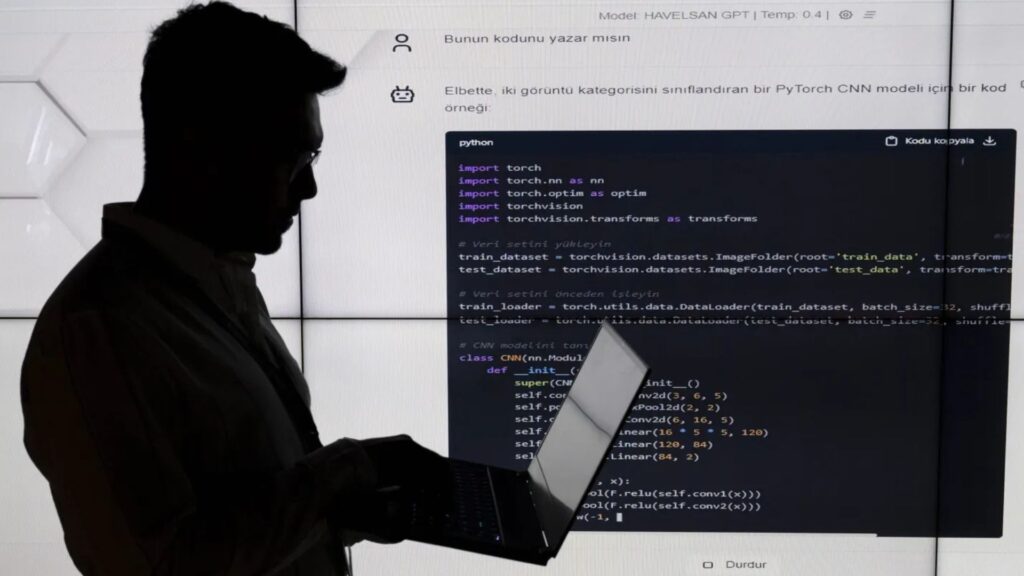

Utku Ucrak/Anadolu via Getty Images

“Vibe coding” (using conversational AI tools to turn natural-language instructions into working code prototypes) has captured Silicon Valley’s imagination, with leaders like Klarna CEO Sebastian Siemiatkowski and Meta Chief AI Officer Alexandr Wang hailing it as the next revolutionary leap. But while executives tout the benefits of rapid prototyping, a recent study found security vulnerabilities in 45% of AI-generated code samples, raising serious concerns about long-term stability.

The Executive Embrace

On the Sourcery podcast, Klarna’s Siemiatkowski described how he now prototypes product ideas on his own in 20 minutes using AI coding tools such as Cursor, sparing his engineers half-baked concepts and speeding up decision-making (Economic Times). Google CEO Sundar Pichai has said that AI already generates over 30% of new code at Google, calling it “the biggest leap in software creation in 25 years.” At Meta, Wang predicts that traditional coding skills will become obsolete within five years, with AI models, and eventually AI-powered smart glasses, making “vibe coding” the default way to build software.

The Security Reality Check

Veracode’s 2025 GenAI Code Security Report found security flaws in 45% of AI-generated code samples. When both a secure and an insecure method were available, models were roughly as likely to pick the insecure one, and that error rate has not improved as the models themselves have advanced (Fortune). A July 2025 Fastly survey of 791 developers found that 95% spend extra time fixing AI-generated code, and that senior developers are roughly two and a half times as likely as juniors to ship substantial amounts of AI-generated code to production (32% vs. 13%). Cybersecurity researchers have also uncovered arbitrary code execution vulnerabilities and unintended leaks of sensitive data in popular vibe-coding platforms, and Databricks’ AI Red Team demonstrated how AI-generated code for a simple game could introduce a remote code execution flaw.

Generational Divide and Market Adaptation

Despite the risks, corporate demand for vibe-coding proficiency is soaring. Visa, Reddit, and DoorDash now require job candidates to demonstrate skill with AI coding tools, and some startups mandate that 50% of the code in their repositories be AI-generated. Y Combinator reports that 25% of the startups in its current batch have codebases that are 95% AI-generated, a trend that has spawned a niche of “vibe coding cleanup specialists” who audit and harden AI-produced code.

Balancing Speed and Stability

As vibe coding spreads from boardrooms to dev teams, the tension between rapid innovation and code security is intensifying. Executives celebrate faster prototyping, but research suggests the industry may be trading long-term reliability for short-term velocity, underscoring the need for robust auditing tools, developer training, and updated security practices to ensure AI-driven code stands up to real-world threats.